Econsultancy recently posted a comprehensive review of best practices for e-commerce consumer surveys by Tim Leighton-Boyce. It’s an excellent piece. The writer is obviously a practitioner, since the advice reflects knowledge that can only have come the hard way. One piece of that advice, however, is fundamentally flawed. In the section “Where to place the survey”, Tim writes:

“Although there are great systems for allowing feedback surveys on every page of your site, I’m not in favour of using any form of pop-up which might distract your visitor from whatever they want to do.

Instead, my favourite type of e-commerce survey is one embedded in the order confirmation page. I like these because there is zero risk of distracting someone from placing an order since the survey is only offered once the sale is complete.”

Tim anticipates that this will raise objections, so he adds:

“The obvious objection is that this means you don’t get any survey entries from people who did not intend to buy or were unable to buy. That’s a common-sense point. But in reality it doesn’t seem to be a problem.

… In real life it turns out that people who have problems buying can be remarkably tenacious. Some will eventually find what they want, or make it through a tricky checkout, and then let you know all about the problems when they get to the survey comments form.”

It may, indeed, be a valid assumption that problems experienced by those who complete transactions are the same as the problems of those who abandon the site or fail to complete a transaction. But how do you know for sure? More importantly, how do you quantify the impact of those problems? How do you measure the revenue loss they inflict? How do you determine their root cause? How do you set priorities in taking remedial action?

Failure data may be the most valuable kind you collect

As convenient as it may appear, surveying only those who reach the confirmation page necessarily skews the sample and presents a distorted picture of the user experience, especially the experience of visitors who fail. Visit failure data may be the most valuable data a site can investigate, because beneath that cumulative experience lie the root causes of conversion impediments. Intercepting visitors at the start of their journey through your website ensures that you include those who fail as well as those who transact. For most e-commerce sites, the proportion of visitors who do not purchase far exceeds the proportion who do. The process of identifying whom the site fails, where it fails them, and why it fails them offers the most direct route to continuous improvement.
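To make the skew concrete, here is a minimal sketch in Python. The numbers are invented purely for illustration (they are not survey results), and the sketch assumes, unrealistically, that every visitor offered a survey answers it. The `Visit` record and `problem_rate` helper are hypothetical names, not part of any survey product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Visit:
    purchased: bool    # did the visit end on the order confirmation page?
    hit_problem: bool  # did the visitor report a problem during the visit?


def problem_rate(visits):
    """Share of visits in which a problem was reported (0.0 for an empty sample)."""
    visits = list(visits)
    if not visits:
        return 0.0
    return sum(v.hit_problem for v in visits) / len(visits)


# Hypothetical population of 100 visits (illustrative numbers only):
# 5 purchasers, 1 of whom hit a problem; 95 non-purchasers, 57 of whom did.
population = (
    [Visit(purchased=True, hit_problem=True)] * 1
    + [Visit(purchased=True, hit_problem=False)] * 4
    + [Visit(purchased=False, hit_problem=True)] * 57
    + [Visit(purchased=False, hit_problem=False)] * 38
)

# Survey offered at the start of the visit: everyone can be sampled.
entry_sample = population

# Survey embedded in the confirmation page: only purchasers are sampled.
confirmation_sample = [v for v in population if v.purchased]

print(f"Problem rate, entry survey:        {problem_rate(entry_sample):.0%}")
print(f"Problem rate, confirmation survey: {problem_rate(confirmation_sample):.0%}")
```

Under these made-up numbers the entry survey sees problems in 58% of visits, while the confirmation-page survey sees them in only 20%, because the visitors most affected never reach the page where the survey lives.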

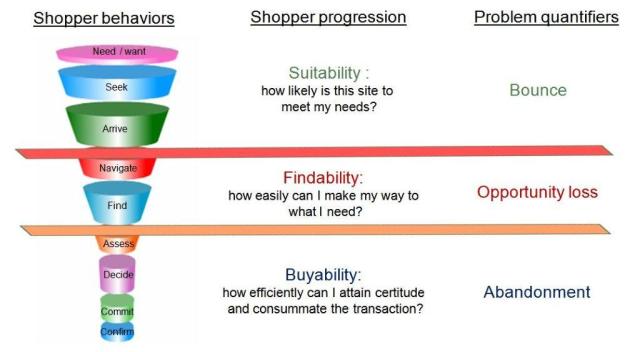

Behavioural and attitudinal feedback from hundreds of thousands of survey respondents over the last decade reveals patterns applicable to any e-commerce site. This is what they look like:

The patterns start with a notional depiction of shopper behaviour: the sequence of thoughts or actions that shoppers exhibit when shopping online.

These steps can be grouped into three basic user decision points:

Suitability – is this site likely to meet my needs?

Findability – how easily can I make my way to the product or information I seek?

Buyability – how easily can I reach certitude and then complete the transaction?

Visitors who fall out of the funnel at the site suitability level represent (in our classification) the problem of Bounce.

Visitors who fall out of the funnel at the findability level represent Opportunity Loss.

Visitors who fall out of the funnel at the buyability level represent the Abandonment problem.

These problems are what site owners must identify, quantify, analyze, and address if they are to systematically attack visit failure and its impact on conversion. Sampling the visitor population only from those who successfully navigate their way through to the confirmation page makes this process inordinately difficult, if not impossible.
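The three failure categories above can be sketched as a simple classification by the first decision point a visit fails to clear. This is an illustrative sketch only: the `Outcome` enum, the `classify` function, and the three boolean flags are hypothetical, and how a site would actually derive those flags (survey answers, analytics events, or both) is left as an assumption.

```python
from collections import Counter
from enum import Enum


class Outcome(Enum):
    BOUNCE = "Bounce"                      # failed at suitability
    OPPORTUNITY_LOSS = "Opportunity Loss"  # failed at findability
    ABANDONMENT = "Abandonment"            # failed at buyability
    PURCHASE = "Purchase"                  # cleared all three decision points


def classify(site_suitable: bool, found_item: bool, purchased: bool) -> Outcome:
    """Label a visit by the first decision point it failed to clear."""
    if not site_suitable:
        return Outcome.BOUNCE
    if not found_item:
        return Outcome.OPPORTUNITY_LOSS
    if not purchased:
        return Outcome.ABANDONMENT
    return Outcome.PURCHASE


# Hypothetical visits as (site_suitable, found_item, purchased) flags.
visits = [
    (False, False, False),  # decided the site would not meet their needs
    (True, False, False),   # could not find the product or information
    (True, True, False),    # found it, but did not complete the transaction
    (True, True, True),     # purchased
    (False, False, False),  # another bounce
]

tally = Counter(classify(*flags) for flags in visits)
for outcome in Outcome:
    print(f"{outcome.value}: {tally[outcome]}")
```

A tally like this is only possible when visitors are sampled from the start of the visit. A confirmation-page survey would populate the `Purchase` row alone.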

Continuous improvement is not just a tool; it is a philosophy and a strategy. If a site is going to commit to a continuous improvement process, it should ask visitors to participate from the outset of their journey, so that it captures the full spectrum of site experiences and outcomes. That’s where analysis starts and systematic improvement begins.

–Roger Beynon, CSO


Think of your site visitors’ comments and responses to your survey questions as raisins in a loaf of raisin bread. One slice of the bread will give you several raisins, just as a briefly presented online survey will, but those raisins (and that particular survey) may not give you all the information you need to make a business decision about your company website. It takes the entire loaf, and ideally an entire year of data collection, to get the whole story…
